Every business must keep its data infrastructure current to stay competitive in a digital world. As a business expands, so does its data, and storing that data in legacy systems with limited storage capacity, such as Teradata or Greenplum, becomes challenging. Moving the data to a cloud-based platform like Snowflake solves this problem, and this is where data migration comes in. In this context, data migration is the process of transferring data from a legacy data warehouse to Snowflake. The source can be a storage system, an application, or a data format, and the move follows a lift-and-shift process of extracting the data and loading it into the target. Migration is usually carried out when a business upgrades its systems.

Disadvantages of Legacy Data Warehouses

- Legacy Platform: Legacy data warehouses use traditional technologies that may fail to meet the requirements of modern businesses, such as higher performance.

- Outdated Technology: Inefficient legacy infrastructure, such as aging servers and outdated software, does not work at its full potential. Companies are migrating to the cloud to gain the advantages of cloud computing.

- Batch-Oriented Processing: Traditional ETL (Extract-Transform-Load) processes run at scheduled intervals, causing delays in data availability. This hampers real-time insights and slows decision-making.

Steps Involved in the Snowflake Data Migration Process

1. Planning and Assessment:

- Define the Scope: Identify the data that must be migrated, its source and target systems, and the outcome expected from the migration. The budget, migration timeline, and deadlines are also set in this step.

- Analyze Data Volume and Complexity: Understand the size and complexity of the data to be migrated, including its format, structure, and dependencies.

- Choose the Migration Strategy: Select the migration strategy that best fits factors such as downtime tolerance, budget, and technical expertise. Common options include full migration, batch migration, and real-time migration.

- Risk Assessment: Identify the risks that may arise during migration, such as data loss, downtime, and compatibility issues, and plan how to handle them.

2. Data Preparation:

- Data Profiling and Cleansing: Analyze the data to detect duplicates and errors. Clean the data to ensure accuracy and integrity in the new system.

- Data Mapping: Define how data elements in the source system map to the target system’s structure and format.

- Test Data Selection: Select a sample of the data and test it to identify the problems and risks likely to occur during migration. Resolve these problems in advance so that the full migration runs smoothly.

- Data Backup: Back up the data before the migration starts as a safeguard against data loss if the migration fails.
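
The profiling and cleansing step above can be illustrated with a minimal sketch. It assumes the source rows have already been read into memory as Python dictionaries; the function names `profile_rows` and `deduplicate` and the sample columns are hypothetical and chosen only for illustration, not part of any Snowflake or Kasmo tool.

```python
from collections import Counter


def profile_rows(rows, key_fields):
    """Report duplicate keys and per-column empty-value counts.

    rows is a list of dicts; key_fields names the columns that should
    uniquely identify a row (an assumption made for this sketch).
    """
    key_counts = Counter(tuple(row.get(f) for f in key_fields) for row in rows)
    duplicates = {key: n for key, n in key_counts.items() if n > 1}

    empty_counts = Counter()
    for row in rows:
        for column, value in row.items():
            if value is None or value == "":
                empty_counts[column] += 1
    return duplicates, dict(empty_counts)


def deduplicate(rows, key_fields):
    """Keep the first row seen for each key, preserving the input order."""
    seen = set()
    unique = []
    for row in rows:
        key = tuple(row.get(f) for f in key_fields)
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique
```

In practice the profiling would usually run as queries against the source warehouse rather than in memory, but the checks are the same: count repeated keys, count empty values, and decide which rows to keep.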

3. Extraction and Transformation:

- Extract Data: Use appropriate tools and methods to extract the data from the source system. This may involve copying files, running database queries, or using specialized migration software. The extracted data is typically compressed in this step, by up to 70% depending on the data and the compression method.

- Transform Data: Modify the data according to the target system’s requirements. This may involve restructuring, reformatting, or applying conversion rules.
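
As a rough sketch of the extract-and-transform step, the example below renames source columns to target names and compresses the extracted rows. It assumes CSV as the interchange format and gzip as the compression method, both picked only because they are in the Python standard library; the column names and the helpers `transform_row` and `to_gzip_csv` are hypothetical.

```python
import csv
import gzip
import io


def transform_row(row, column_map):
    """Rename source columns to target names and trim string values.

    column_map maps source column name -> target column name.
    """
    transformed = {}
    for source, target in column_map.items():
        value = row[source]
        transformed[target] = value.strip() if isinstance(value, str) else value
    return transformed


def to_gzip_csv(rows, fieldnames):
    """Serialize rows as CSV and return (raw_bytes, gzip_compressed_bytes)."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)
    raw = buffer.getvalue().encode("utf-8")
    return raw, gzip.compress(raw)
```

Comparing `len(raw)` with the length of the compressed bytes shows the saving for a given data set. The ratio depends heavily on how repetitive the data is, so it should be measured on real extracts rather than assumed.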

4. Loading and Validation:

- Load Data: Transfer the transformed data into the target system. This may involve bulk loading, incremental updates, or API integration.

- Data Validation: Verify the accuracy and completeness of the migrated data. Compare it to the source data and run tests to ensure its usability in the new system. In addition to ETL developers, business analysts and system analysts should be involved to keep the migration smooth. The duration of this step varies with the volume of data.
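
One common way to compare migrated data with its source is to check row counts and an order-independent checksum for each table. The sketch below assumes both sides can be read as lists of dictionaries with identical column names and value types; `table_fingerprint` and `validate_migration` are hypothetical helper names, and a real migration would normally compute these figures with queries on each system.

```python
import hashlib

MASK_64 = (1 << 64) - 1


def table_fingerprint(rows):
    """Return (row_count, checksum); the checksum does not depend on row order."""
    count = 0
    checksum = 0
    for row in rows:
        # Canonical text form of the row: columns sorted by name.
        canonical = "|".join(f"{col}={row[col]!r}" for col in sorted(row))
        row_hash = hashlib.sha256(canonical.encode("utf-8")).digest()
        # Summing (rather than XOR-ing) keeps duplicate rows from cancelling out.
        checksum = (checksum + int.from_bytes(row_hash[:8], "big")) & MASK_64
        count += 1
    return count, checksum


def validate_migration(source_rows, target_rows):
    """Return a list of discrepancies; an empty list means the tables match."""
    source_count, source_sum = table_fingerprint(source_rows)
    target_count, target_sum = table_fingerprint(target_rows)
    problems = []
    if source_count != target_count:
        problems.append(
            f"row count mismatch: source={source_count}, target={target_count}"
        )
    if source_sum != target_sum:
        problems.append("checksum mismatch: row contents differ")
    return problems
```

Because the comparison is type-sensitive, values that change representation during migration (for example, numbers loaded as strings) need to be normalized on both sides before fingerprinting.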

5. Performance Monitoring:

- Monitor the Migration: Monitor the migration continuously to identify errors, performance issues, and unexpected behaviour. Double-check that all the data has been migrated completely. Once this is verified, switch over to the new system and deactivate the old one.

Choosing Data Migration Approaches:

The data migration process remains the same whether the big bang approach or the trickle approach is followed. The choice between them depends on business requirements. Here is a quick overview of both approaches.

1. Big Bang Approach:

This approach moves all the data in a single operation and suits small companies with small volumes of data. The migration completes within a short period. It is cost-effective, but the risk of migration failure is high and the cutover may cause downtime.

2. Trickle Approach:

This approach splits the migration into multiple operations and suits large organizations with large volumes of data. It is more expensive and more complex to design, but the risk of migration failure is low because the process is verified at each phase.
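
The phased nature of the trickle approach can be sketched as follows. The example groups tables into batches by size and verifies each batch before moving on. The table names, the size limit, and the `migrate` and `verify` callbacks are hypothetical placeholders; a big bang migration corresponds to a single batch containing every table.

```python
def plan_batches(table_sizes, max_batch_size):
    """Group tables into batches whose combined size stays within the limit.

    Tables are taken largest first; a table bigger than the limit
    ends up in a batch of its own.
    """
    batches, current, current_size = [], [], 0
    ordered = sorted(table_sizes.items(), key=lambda item: item[1], reverse=True)
    for table, size in ordered:
        if current and current_size + size > max_batch_size:
            batches.append(current)
            current, current_size = [], 0
        current.append(table)
        current_size += size
    if current:
        batches.append(current)
    return batches


def run_trickle(batches, migrate, verify):
    """Migrate batch by batch and stop at the first batch that fails verification.

    Returns (completed_batches, failed_batch); failed_batch is None on success.
    """
    completed = []
    for batch in batches:
        migrate(batch)
        if not verify(batch):
            return completed, batch
        completed.append(batch)
    return completed, None
```

Stopping at the first failed batch limits the blast radius of an error to one phase, which is the reason the trickle approach has a lower failure risk at the cost of more planning.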

Benefits of Data Migration to Snowflake:

- Pay-per-Use Model: Snowflake charges only for the resources you use, eliminating the need for upfront infrastructure investments or wasted capacity. This leads to significant cost savings compared to traditional on-premises data warehouses.

- Effortless Scaling: Snowflake’s architecture separates storage from compute, so you can scale storage and compute resources independently. It also supports various data formats, such as JSON and Parquet.

- Faster Data Ingestion: Snowflake’s cloud-based architecture enables efficient data loading from various sources, including cloud storage, on-premises databases, and streaming platforms. This translates to quicker access to your data for analysis and reporting.

- Simplified Data Management: Snowflake offers a centralized platform for data storage, processing, and analysis, eliminating the need for complex data silos and integrations. This simplifies data management and improves data accessibility. Snowflake handles all types of data, from petabytes of structured data to semi-structured data such as JSON, XML, and Avro.

- Multi-layered Security: Snowflake employs robust security measures, including encryption at rest and in transit, access control mechanisms, and continuous threat monitoring. This supports a high level of data security and compliance with regulations such as GDPR and CCPA. It also provides end-to-end encryption for data shared between internal and external teams, keeping that data confidential.

- Built-in Disaster Recovery: Snowflake’s cloud infrastructure offers built-in disaster recovery features, storing your data redundantly and supporting replication across multiple regions. This helps ensure business continuity even in case of unforeseen events. Because Snowflake is a managed service, it also reduces the complexity of maintaining infrastructure, leading to near-zero maintenance.

Data Migration Best Practices

During a data migration, businesses should follow best practices to avoid data loss, financial setbacks, and operational disruptions. Following established guidelines ensures a smooth transition, minimizes downtime, and preserves the integrity of your information. Here are some best practices to follow during the data migration process:

- Prepare a plan to enhance data quality and overcome existing challenges.

- Make a list of the data to be migrated, ensuring that only required, good-quality data is migrated.

- Set up a migration team with specialists to manage the project.

- To keep your data safe, back up the data before the process starts.

- Monitor the process at every stage, from planning to execution, to keep the project on track.

- After the process, check the performance of the new system and optimize it.

- Deactivate the old system once the migration to the new system has succeeded.

Kasmo’s Expertise in Data Migration to Snowflake:

Kasmo, Snowflake’s selected partner, and Snowflake’s Professional Services team offer a range of services to accelerate your data migration and ensure a smooth, successful implementation.

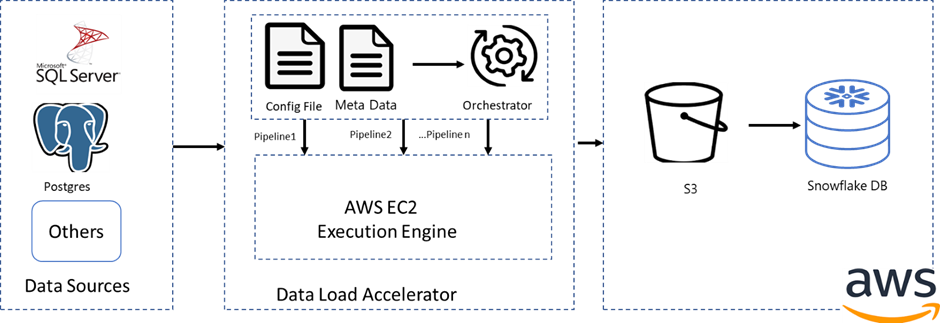

We apply our skills, knowledge, and experience to the specific challenges that your organization may face during the migration process. We offer services ranging from high-level architecture recommendations to manual code conversion. Additionally, we have built tools that automate and accelerate the migration process so that you get the full benefits of Snowflake.

How Kasmo’s Data Migration Accelerators Reduce Data Migration Effort:

Our data migration accelerator uses automation to reduce the errors and downtime that can occur when migrating to Snowflake. Here are some of the benefits organizations get with Kasmo’s data migration accelerators:

- Built-in Data Auditing: Tracks all data changes during migration for transparency, accountability, and troubleshooting.

- Zero Downtime: Ensures uninterrupted availability of the source system during migration.

- Data Integrity: Maintains accuracy and consistency of transferred data through validation and cleansing.

- Faster Data Compression: The framework uses Snappy compression, enabling faster compression of files and extraction of data in Parquet format.

- Reduced Time to Market: Accelerates migration projects through automation, so benefits are realized sooner.

- Data Reconciliation: Highlights any discrepancies between source and target data for complete consistency at every stage.

- Minimal Human Intervention: Automates the migration processes, so very little human intervention is required.

- DevOps Templates: Automates provisioning of required services, reducing manual intervention.

- Interactive UI: Offers an intuitive interface for selecting tables or schemas for migration. It presents comprehensive logs and real-time progress updates during the migration process, improving the overall customer experience.

Data migration may be a difficult task, but with the right process it can be a transformative journey. With proper strategies and best migration practices, your data can reach its destination safely. Kasmo’s data migration services ensure a smooth data migration, which opens the door for new possibilities.

Are you thinking of migrating your business data? Talk to our experts for a successful data migration.